Added

Python SDK: New Function to Generate Parsing Evaluation Reports

almost 2 years ago by Wafaa Kahoui

We are pleased to announce a highly anticipated update to our Python SDK, enabling our users to generate reports that evaluate the quality of our parsing models.

😍 Why is it a big deal for HrFlow.ai users?

With the new capability to generate parsing evaluation reports, HrFlow.ai users can:

- Assess the Accuracy of HrFlow.ai parsing models (Quicksilver, Hawk, Mozart) to select the most suitable one for their specific use case.

- Identify Improvements with each monthly product release to continuously enhance performance.

- Compare Models by evaluating our state-of-the-art models against competitor performance, ensuring the best choice for their needs.

🔧 How does it work?

To generate a Profile Parsing evaluation report, you can:

- use the steps in this Google Colab Notebook from our HrFlow.ai GitHub Cookbook

- follow the instructions provided below

- Install the HrFlow.ai Python SDK using the command `pip install -U hrflow` or `conda install hrflow -c conda-forge`.

- Log in to the HrFlow.ai Portal at hrflow.ai/signin.

- Obtain your API key from developers.hrflow.ai/docs/api-authentication.

- Ensure the Profile Parsing API is enabled in your account.

- Create a Source as described at developers.hrflow.ai/docs/connectors-source.

- Upload your profiles to the source you created.

- Call the

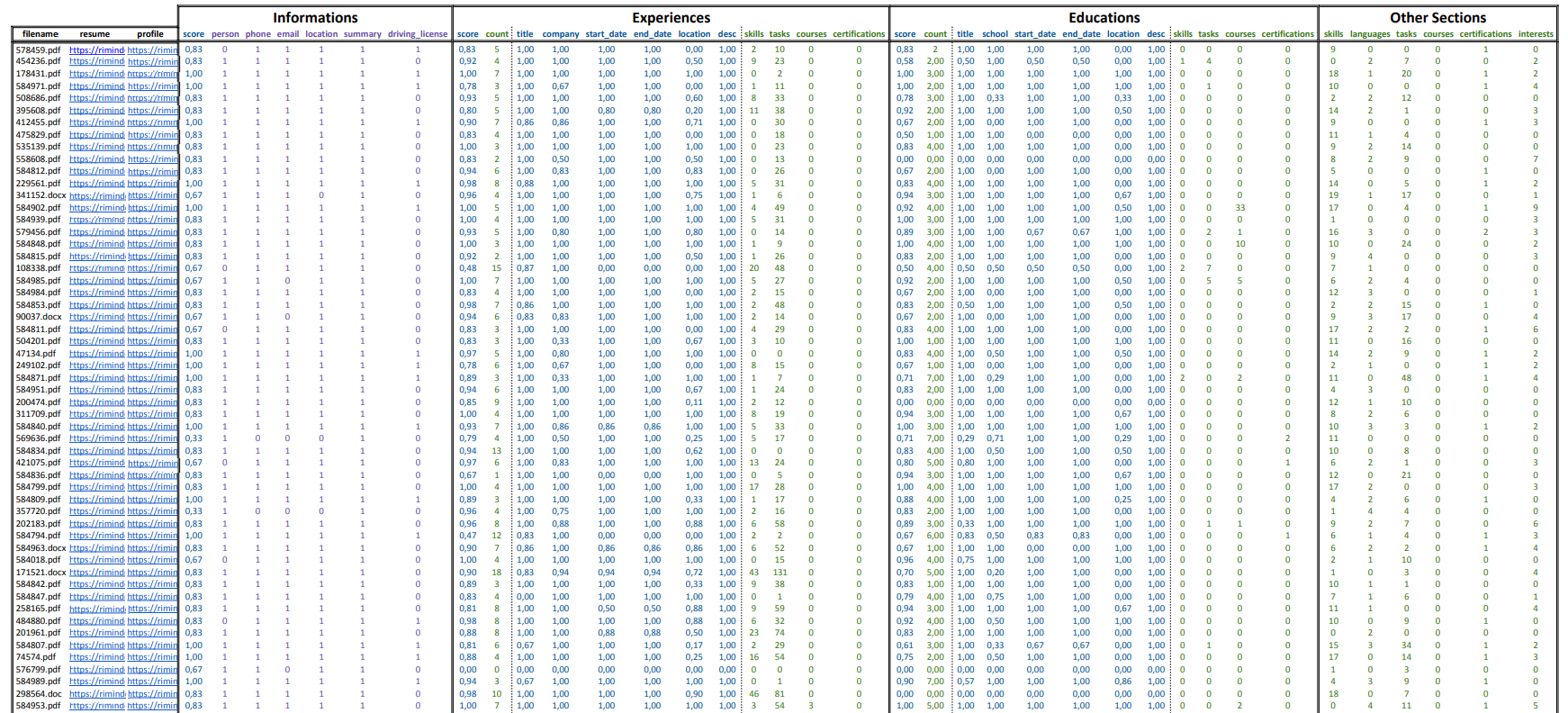

generate_parsing_evaluation_report()function from our Python SDK with the required arguments to generate an Excel report.
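The final step above can be sketched roughly as follows. This is a minimal illustration rather than the official usage: the import path `hrflow.utils`, the keyword arguments `source_key`, `report_path`, and `show_progress`, the environment variable names, and the helper `build_report_arguments` are all assumptions made for this example, so check them against the SDK documentation or the Colab notebook before relying on them.

```python
import os
from pathlib import Path


def build_report_arguments(source_key: str, report_path: str) -> dict:
    """Hypothetical helper: validate and collect the arguments for the report call."""
    if not source_key:
        raise ValueError("source_key must not be empty")
    path = Path(report_path)
    # The report is an Excel file, so insist on an .xlsx extension.
    if path.suffix.lower() != ".xlsx":
        raise ValueError("report_path should point to an .xlsx file")
    return {
        "source_key": source_key,
        "report_path": str(path),
        "show_progress": True,  # assumed optional flag
    }


def generate_report(source_key: str, report_path: str) -> None:
    """Create a client and request the parsing evaluation report."""
    # Imported lazily so this module loads even if the SDK is not installed.
    from hrflow import Hrflow
    from hrflow.utils import generate_parsing_evaluation_report  # assumed import path

    # Hypothetical environment variable names holding your credentials.
    client = Hrflow(
        api_secret=os.environ["HRFLOW_API_SECRET"],
        api_user=os.environ["HRFLOW_API_USER"],
    )
    generate_parsing_evaluation_report(
        client, **build_report_arguments(source_key, report_path)
    )


if __name__ == "__main__":
    # The source key identifies the Source that holds your uploaded profiles.
    generate_report(os.environ["HRFLOW_SOURCE_KEY"], "parsing_evaluation.xlsx")
```

Reading the credentials and source key from environment variables keeps the API key out of the script itself.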