📊 Evaluate a Board of Jobs

Learn how to evaluate the quality of HrFlow.ai's job parsing on your job postings.

This guide explains how to generate a detailed parsing evaluation report and interpret its results. By assessing the completeness and accuracy of your parsed jobs, you can ensure higher-quality job data, leading to more effective recruitment and talent management.

A. Why Evaluate Parsing Quality?

Understanding how accurately parsing models detect and fill the various fields of a job posting is crucial for several reasons:

- Assess Model Performance: Determine how well HrFlow.ai's parsing model performs on your specific use case.

- Benchmarking: Compare HrFlow.ai's models against competitors to make informed decisions.

B. Step by Step

B.1. Initialize the HrFlow Client

Prerequisites

- ✨ Create a Workspace

- 🔑 Get your API Key

- HrFlow.ai Python SDK version 4.1.0 or above: Install it via pip with `pip install -U "hrflow>=4.1.0"` or conda with `conda install "hrflow>=4.1.0" -c conda-forge`.
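If you are unsure which SDK version is installed, a quick check like the one below can help. This is a minimal sketch that relies only on the standard library (`importlib.metadata`); the helper names `parse_version` and `hrflow_is_recent_enough` are hypothetical, and the parser assumes plain numeric `X.Y.Z` version strings, so pre-release tags such as `4.1.0rc1` are not handled.

```python
from importlib.metadata import PackageNotFoundError, version

# Hypothetical helpers for illustration; not part of the hrflow SDK.
MINIMUM = (4, 1, 0)  # minimum SDK version required by this guide


def parse_version(text: str) -> tuple:
    """Turn a plain numeric version string into a 3-tuple of ints."""
    parts = [int(part) for part in text.split(".")[:3]]
    parts += [0] * (3 - len(parts))  # pad "4.1" to (4, 1, 0)
    return tuple(parts)


def hrflow_is_recent_enough(installed: str, minimum: tuple = MINIMUM) -> bool:
    """Compare element-wise, so 4.10.0 correctly ranks above 4.9.0."""
    return parse_version(installed) >= minimum


if __name__ == "__main__":
    try:
        installed = version("hrflow")
        print(installed, "OK" if hrflow_is_recent_enough(installed) else "too old")
    except PackageNotFoundError:
        print("hrflow is not installed")
```

Comparing integer tuples rather than raw strings avoids the classic pitfall where `"4.10.0" < "4.9.0"` under lexicographic ordering.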

First, initialize the HrFlow client with your API credentials.

```python
from hrflow import Hrflow
from hrflow.utils import generate_parsing_evaluation_report

client = Hrflow(api_secret="your_api_secret", api_user="your_api_user")
```

B.2. Ensure Your Data is Ready

Before generating the parsing evaluation report, you need to ensure that your data is ready. This involves parsing job postings and storing them in a board.

Make sure you have created a board and that it contains parsed jobs. For more details on creating a board, refer to the Connectors Board Documentation. For more details on parsing jobs, refer to Job Parsing.

B.3. Generate the Parsing Evaluation Report

Use the generate_parsing_evaluation_report function to create the evaluation report.

```python
generate_parsing_evaluation_report(
    client,
    board_key="YOUR_BOARD_KEY",
    report_path="parsing-evaluation.xlsx",
    show_progress=True,
)
```

Field Explanations:

| Parameter | Type | Example | Description |
|---|---|---|---|
| client | Hrflow | client = Hrflow(api_secret="your_api_secret", api_user="your_api_user") | The HrFlow client object initialized with your API credentials. It is used to interact with the HrFlow.ai API and is required to authenticate and authorize the API requests. |
| board_key | str | "YOUR_BOARD_KEY" | The key identifying the board where your job postings are stored. This key is unique to the board and specifies which set of job postings you want to evaluate. |
| report_path | str | "parsing-evaluation.xlsx" or "/path/to/directory/parsing-evaluation" | The file path where the evaluation report will be saved. This can be either an existing directory, in which case the report is saved as parsing_evaluation.xlsx within that directory, or a complete file path. If the provided path does not end in .xlsx, the function automatically appends the .xlsx extension. |
| show_progress | bool | True | Whether to display a progress bar while the report is generated (True to show it, False to disable it). This is useful for monitoring progress, especially when processing a large number of job postings. |
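The path rules described for `report_path` in the table above can be summarized as a small function. This is an illustrative re-implementation written for this guide, not the SDK's actual code: the helper name `resolve_report_path` is hypothetical, and the default file name is taken verbatim from the table.

```python
import os

# Hypothetical helper mirroring the documented report_path rules;
# it is not the hrflow SDK's implementation.
DEFAULT_FILENAME = "parsing_evaluation.xlsx"  # name as given in the table above


def resolve_report_path(report_path: str) -> str:
    """Return the file path where the report would be written."""
    # Case 1: an existing directory -> place the default file name inside it.
    if os.path.isdir(report_path):
        return os.path.join(report_path, DEFAULT_FILENAME)
    # Case 2: a file path without the extension -> append ".xlsx".
    if not report_path.endswith(".xlsx"):
        return report_path + ".xlsx"
    # Case 3: a complete .xlsx file path -> use it unchanged.
    return report_path


print(resolve_report_path("parsing-evaluation.xlsx"))
print(resolve_report_path("/path/to/directory/parsing-evaluation"))
```

Note that the directory check comes first: a path without an extension is treated as a directory only if that directory already exists.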

B.4. Interpreting the Results

The generated report is an Excel file (parsing-evaluation.xlsx in the example above) that assesses how well the parsing model detected and filled the various fields of the job postings stored in the specified HrFlow board.

The report consists of two sheets:

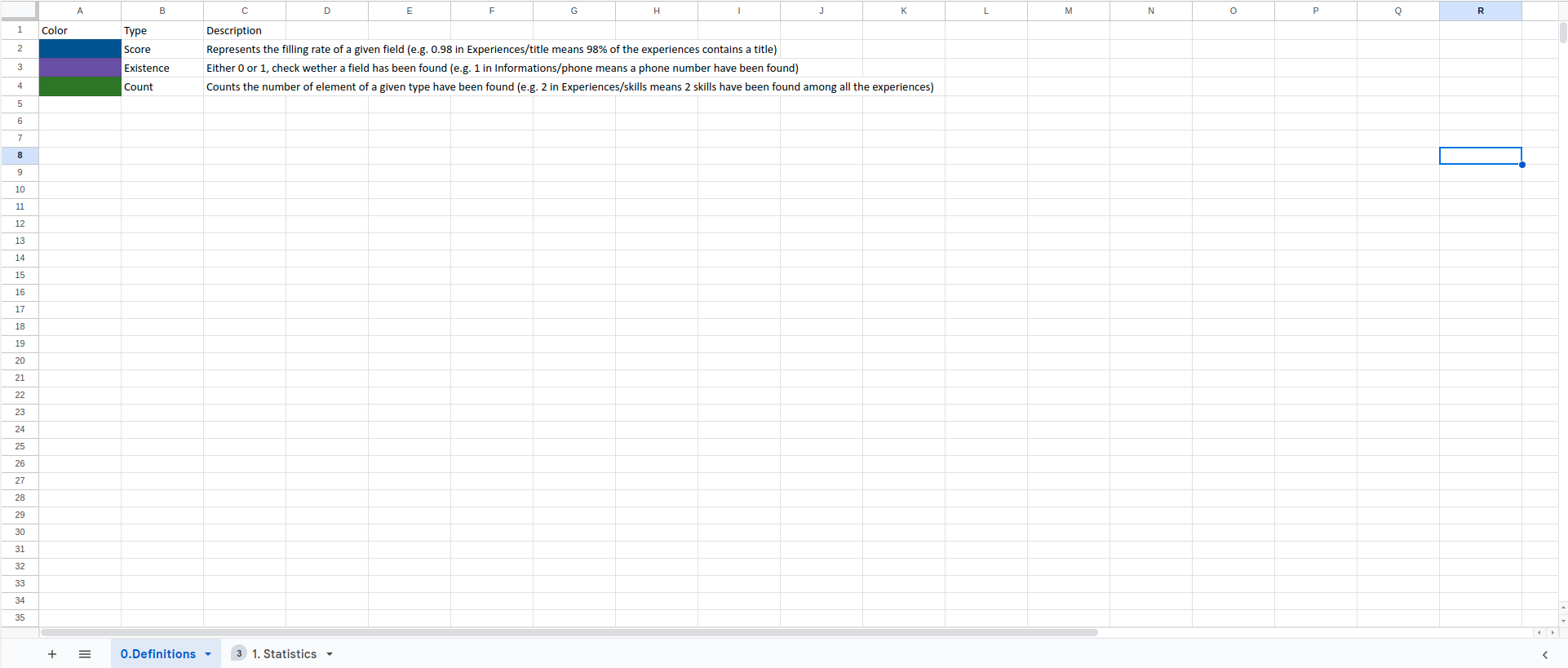

- Definition: This sheet explains each field and how to interpret the results.

  - Score: Represents the filling rate of a given field (e.g., 0.98 in responsibilities means 98% of the job postings contain a responsibilities field).

  - Existence: Either 0 or 1; indicates whether a field has been found (e.g., 1 in location means a location has been found).

  - Count: The number of elements of a given type that have been found (e.g., 2 in skills means 2 skills have been found among all the job postings).

- Statistics: This sheet presents a comprehensive set of results, offering insights into parsing quality across sections such as the job overview, ranges of floats, ranges of dates, and other sections.

  - Overview:

    - score: Overall score for the overview section, computed as the average of all overview field scores.

    - name, location, summary, culture, benefits, responsibilities, requirements, interviews: Scores for the presence and completeness of each field within the job overview.

  - Ranges of Floats:

    - score: Overall score for the float range section.

    - count: The number of float ranges detected.

    - name, value_min, value_max, unit: Scores and values for the presence and completeness of each field within the float ranges.

  - Ranges of Dates:

    - score: Overall score for the date range section.

    - count: The number of date ranges detected.

    - name, value_min, value_max: Scores and values for the presence and completeness of each field within the date ranges.

  - Other Sections:

    - skills: Total number of skills detected across the entire job posting.

    - languages: Total number of languages detected.

    - tasks: Total number of tasks detected.

    - courses: Total number of courses detected.

    - certifications: Total number of certifications detected.
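To make the Score, Existence, and Count metrics concrete, the sketch below recomputes them on two toy job dictionaries. It is a simplified illustration, not the SDK's implementation, and rests on several assumptions: a field counts as found when it is present and non-empty, list-valued fields such as skills are counted by their length, and the overview section score is the plain mean of the per-field overview scores (only a subset of overview fields is used here for brevity). The helper names and sample jobs are hypothetical.

```python
from statistics import mean

# Hypothetical helpers and toy data; not the hrflow SDK's report logic.
OVERVIEW_FIELDS = ["name", "summary", "responsibilities", "requirements"]  # subset


def existence(job: dict, field: str) -> int:
    """1 if the field is present and non-empty in one posting, else 0."""
    return int(bool(job.get(field)))


def score(jobs: list, field: str) -> float:
    """Filling rate: share of postings in which the field exists."""
    return sum(existence(job, field) for job in jobs) / len(jobs)


def count(jobs: list, field: str) -> int:
    """Total number of elements of a list-valued field across all postings."""
    return sum(len(job.get(field) or []) for job in jobs)


def overview_score(jobs: list) -> float:
    """Section score as the average of the per-field overview scores."""
    return mean(score(jobs, field) for field in OVERVIEW_FIELDS)


jobs = [
    {"name": "Data Engineer", "summary": "Build pipelines", "responsibilities": "Own ETL",
     "requirements": "", "skills": [{"name": "Python"}, {"name": "SQL"}]},
    {"name": "Nurse", "summary": "", "responsibilities": "Patient care",
     "requirements": "RN license", "skills": [{"name": "Triage"}]},
]

print(score(jobs, "responsibilities"))     # 1.0
print(existence(jobs[0], "requirements"))  # 0
print(count(jobs, "skills"))               # 3
print(overview_score(jobs))                # 0.75
```

Here name and responsibilities are filled in both postings (1.0 each) while summary and requirements are filled in only one (0.5 each), so the overview average is 0.75.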

Key Points to Note

- Scores Reflect Completeness: A low score may indicate missing information in the job postings rather than poor parsing performance.
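One practical way to tell these two causes apart is to pull a few postings in which a low-scoring field is empty and read their original text: if the information is absent from the source, the low score reflects the data rather than the parser. The helper below is a hypothetical sketch of that sampling step. It assumes each job is a dictionary carrying a `key` and the field to check, and it uses a fixed seed so the same sample comes back on every run.

```python
import random

# Hypothetical helper for manual review; not part of the hrflow SDK.


def sample_missing(jobs: list, field: str, k: int = 5, seed: int = 0) -> list:
    """Return up to k keys of postings where `field` is absent or empty."""
    missing = [job["key"] for job in jobs if not job.get(field)]
    rng = random.Random(seed)  # fixed seed -> reproducible sample
    return rng.sample(missing, min(k, len(missing)))


jobs = [
    {"key": "job-1", "benefits": "Remote work"},
    {"key": "job-2", "benefits": ""},
    {"key": "job-3"},
]
print(sorted(sample_missing(jobs, "benefits")))  # ['job-2', 'job-3']
```

Reading a handful of sampled postings is usually enough to see whether the field is genuinely missing from the source or was overlooked by the parser.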

D. Additional Resources

- Feature Announcement

- HrFlow.ai Python SDK on PyPI

- HrFlow Cookbook: Repository containing helpful notebooks by the HrFlow.ai team

- Parsing Evaluator Notebook in Colab
