➡️ Send data from a Tool into HrFlow.ai

Learn how to sync Talent data from a Source or a Board into HrFlow.ai.

The HrFlow.ai Workflows feature lets you synchronize data between your databases and the HrFlow.ai servers. Whether you need to run routines continuously or trigger an execution on an event, both scenarios are possible with Workflows.

This page covers sending data from one of your company's tools (for example, an ATS) to HrFlow.ai.

Remark: The data synchronized here will be job ads, but this also applies very well to profiles.

Prerequisites: To fully understand this part, it is necessary to have read the following pages:

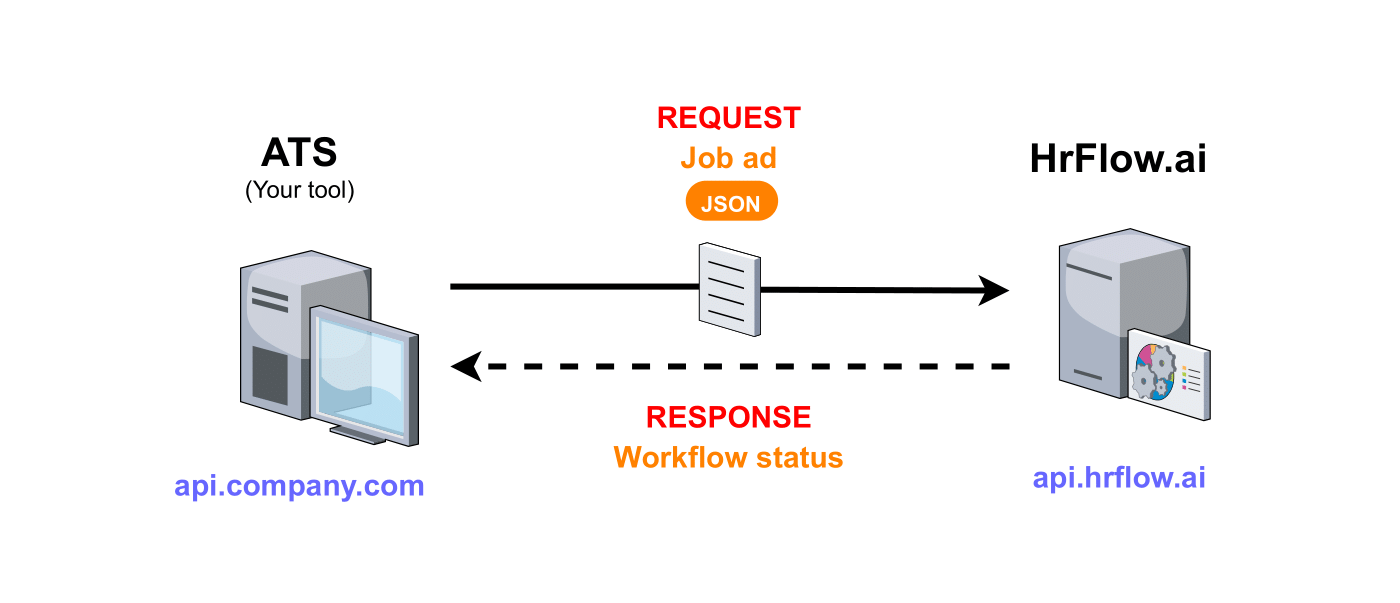

Case Study 1: Receiving a Job opening request from an ATS in HrFlow.ai

This diagram illustrates the communication between your company's ATS and HrFlow.ai

Step 1: Choosing the Trigger

To make the case study generic and keep it as simple as possible, we will communicate with a tool created for the demonstration.

Let's consider that each time you create a job ad in your ATS, it triggers an event and automatically sends a Webhook (POST Request).

Of course, the request sent by your ATS contains all the information about the job.

Here is the list of fields added by the ATS in the request sent to HrFlow.ai:

| Field name | Description | Type |
|---|---|---|
| job_name | Job's name | String |
| job_reference | Job's reference | String |
| job_url | Application URL | String |
| job_summary | Job's summary | String |
| job_address | Job's address | String |
| job_latitude | Job's latitude | Float |
| job_longitude | Job's longitude | Float |
| job_start | Job start date | String formatted as an ISO 8601 date |
| job_end | Job end date | String formatted as an ISO 8601 date |
| job_salary_min | Minimum salary | Float |
| job_salary_max | Maximum salary | Float |
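As a minimal sketch, the field list above can be turned into a payload check that runs before any mapping. The helper name `find_payload_problems` and the choice to accept JSON integers where a Float is expected are assumptions made for illustration; they are not part of HrFlow.ai.

```python
# Expected fields and types, taken from the table above.
EXPECTED_FIELDS = {
    "job_name": str,
    "job_reference": str,
    "job_url": str,
    "job_summary": str,
    "job_address": str,
    "job_latitude": float,
    "job_longitude": float,
    "job_start": str,
    "job_end": str,
    "job_salary_min": float,
    "job_salary_max": float,
}


def find_payload_problems(payload):
    """Return a list of problems; an empty list means the payload looks valid."""
    problems = []
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
            continue
        value = payload[field]
        if expected_type is float:
            # Assumption: integers such as 4000 are acceptable for Float fields.
            ok = isinstance(value, (int, float)) and not isinstance(value, bool)
        else:
            ok = isinstance(value, expected_type)
        if not ok:
            problems.append(f"wrong type for {field}: {type(value).__name__}")
    return problems
```

A workflow could call this helper first and answer with a 400 status code when the returned list is not empty.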

Step 2: Catching the POST request sent by the first party tool

First, create an endpoint in HrFlow.ai so that your tool can send a job each time one is created.

To do this, you need to create a CATCH workflow (HrFlow.ai Workflows).

Once created, get the Workflow URL (catch hooks URL).

This URL has the following shape:

https://api-workflows.hrflow.ai/teams/XXX/YYY/python3.6/ZZZ

Your tool will send a job to this URL.

Your ATS will use this freshly created endpoint to send the content of newly created jobs.

In other words, your tool will send this kind of request each time a job is created in its database:

| Type | Value |

|---|---|

| URL (POST) | https://api-workflows.hrflow.ai/teams/XXX/YYY/python3.6/ZZZ |

| HEADER | Content-Type:application/json |

| BODY | { "job_name":"Data Scientist", ... "job_salary_max":5500.0 } |
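For illustration, the request in the table above could be built on the ATS side as follows. This is a sketch using only the Python standard library; the function name `build_job_request` is hypothetical, the URL keeps the XXX/YYY/ZZZ placeholders, and the body is abbreviated to two fields.

```python
import json
import urllib.request

# Placeholder URL from the table above; XXX/YYY/ZZZ stand for your own workflow identifiers.
WORKFLOW_URL = "https://api-workflows.hrflow.ai/teams/XXX/YYY/python3.6/ZZZ"


def build_job_request(job):
    """Build (but do not send) the POST request carrying one job as JSON."""
    body = json.dumps(job).encode("utf-8")
    return urllib.request.Request(
        WORKFLOW_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_job_request({"job_name": "Data Scientist", "job_salary_max": 5500.0})
# Sending it would be done with: urllib.request.urlopen(request)
```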

Step 3: Building the Automation logic

It's time to code the internal logic of the Workflow.

As a reminder, this workflow adds a job to a HrFlow.ai Board (Create, Configure a Board).

You must therefore have created a Board beforehand.

In the rest of the demonstration, we will use the following board_key: 5bd99cf57594e4f0e8feb038743368676bcdabac.

This key will be stored in the Environment properties of the workflow with the name BOARD_KEY.

Next, you need to be able to connect the fields sent by your ATS and the appropriate fields to create a HrFlow.ai job.

Fields available for a job: You can find the list of available fields for a HrFlow.ai job in the documentation API REFERENCE.

📖 The Job Object

Connector from the ATS fields to those of a HrFlow.ai object

_request is one of the two parameters of the CATCH workflow function.

```python
job_hrflow_obj = {
    "name": _request["job_name"],
    "reference": _request["job_reference"],
    "url": _request["job_url"],
    "summary": _request["job_summary"],
    "location": {
        "text": _request["job_address"],
        "lat": _request["job_latitude"],
        "lng": _request["job_longitude"]
    },
    "sections": [],  # deprecated
    "culture": "",
    "benefits": "",
    "responsibilities": "",
    "requirements": "",
    "interviews": "",
    "skills": [],
    "languages": [],
    "tags": [],
    "ranges_date": [{
        "name": "dates",
        "value_min": _request["job_start"],
        "value_max": _request["job_end"]
    }],
    "ranges_float": [{
        "name": "salary",
        "value_min": _request["job_salary_min"],
        "value_max": _request["job_salary_max"],
        "unit": "eur"
    }],
    "metadatas": [],
}
```

Simple version of the workflow

```python
import json

from hrflow import Hrflow


def workflow(_request, settings):
    client = Hrflow(api_secret=settings["API_KEY"], api_user=settings["USER_EMAIL"])

    # Map the ATS fields to the fields of a HrFlow.ai job
    job_hrflow_obj = {
        "name": _request["job_name"],
        "reference": _request["job_reference"],
        "url": _request["job_url"],
        "summary": _request["job_summary"],
        "location": {
            "text": _request["job_address"],
            "lat": _request["job_latitude"],
            "lng": _request["job_longitude"]
        },
        "sections": [],  # deprecated
        "culture": "",
        "benefits": "",
        "responsibilities": "",
        "requirements": "",
        "interviews": "",
        "skills": [],
        "languages": [],
        "tags": [],
        "ranges_date": [{
            "name": "dates",
            "value_min": _request["job_start"],
            "value_max": _request["job_end"]
        }],
        "ranges_float": [{
            "name": "salary",
            "value_min": _request["job_salary_min"],
            "value_max": _request["job_salary_max"],
            "unit": "eur"
        }],
        "metadatas": [],
    }

    # Add the job to the Board
    client.job.indexing.add_json(board_key=settings["BOARD_KEY"], job_json=job_hrflow_obj)

    return dict(
        status_code=201,
        headers={"Content-Type": "application/json"},
        body=json.dumps({"reference": _request["job_reference"]})
    )
```

Don't forget to set the environment properties! In the environment properties, the following constants must be defined in order to use them with the settings parameter of the workflow function:

- API_KEY
- USER_EMAIL
- BOARD_KEY

To know more about it: HrFlow.ai Workflows

After its execution, this workflow returns the following response :

| Type | Value |

|---|---|

| STATUS CODE | 201 (SUCCESS) |

| HEADER | Content-Type:application/json |

| BODY | {"reference": "..."} |

A way to secure the communication with the workflow

As the workflow URL is public, anyone with the URL can add a job to your HrFlow.ai Board.

To be sure of the origin of the request, we can add a signature to it.

First, a secret key is shared between your tool and HrFlow.ai.

Here are the steps to prepare the request and send it:

- Just before sending a request, your tool will have to calculate the signature of the request with the request body and the secret key.

- This signature is then added to the header of your request.

- The request is sent to the HrFlow.ai servers.

- Before launching the logic, the workflow will have to recalculate the signature with the request body and the secret key. It will have to compare the calculated signature with the one in the request header.
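The steps above can be sketched with an HMAC-SHA256 signature using only the standard library. The helper names (`serialize_body`, `sign_body`, `verify_body`) are hypothetical. The sketch also assumes both sides serialize the job fields in the same fixed order, because the digest is computed over the exact text of the body.

```python
import hashlib
import hmac
import json

# Field order used when serializing the body. Both sides have to use the
# same order and the same JSON formatting, otherwise the digests differ.
SIGNED_FIELDS = [
    "job_name", "job_reference", "job_url", "job_summary", "job_address",
    "job_latitude", "job_longitude", "job_start", "job_end",
    "job_salary_min", "job_salary_max",
]


def serialize_body(job):
    """Serialize the job fields in a fixed order so both sides sign the same text."""
    return json.dumps({field: job[field] for field in SIGNED_FIELDS})


def sign_body(secret_key, body_text):
    """Return the hex HMAC-SHA256 digest of the body text."""
    return hmac.new(
        secret_key.encode("utf-8"), body_text.encode("utf-8"), hashlib.sha256
    ).hexdigest()


def verify_body(secret_key, body_text, received_signature):
    """Recompute the digest and compare it in constant time."""
    return hmac.compare_digest(sign_body(secret_key, body_text), received_signature)
```

The sender would call `sign_body` just before sending and put the result in a request header, while the receiver would call `verify_body` before running any logic.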

Keep the key secret: This secret key must be stored only in your tool and in HrFlow.ai (Environment properties). It is never transmitted in the request.

If the signatures are different, then the request is compromised, and the logic should not be executed.

This can be explained in two ways:

- Either the request was sent by another server that does not have the right secret key

- Or the body was altered during transmission, maliciously or not

How to retrieve the value of the headers in the workflows?

Once the request is received by the HrFlow servers, the headers and the body of the request are merged and added in the _request parameter.

Thus, for a request with:

- in the HEADER: hrflow-signature: abc123

- in the BODY (JSON): {"reference": "jkl456"}

The value of _request will be {"hrflow-signature": "abc123", "reference": "jkl456"}

As you can see, the secret key shared between the two parties will have to be stored in the Environment properties. We will call it SIGNATURE_SECRET_KEY.

To finish this sub-section, here is the same workflow but this time with a signature system for the request.

```python
import hmac
import hashlib
import json

from hrflow import Hrflow


def check_signature(request_signature, secret_key, request_body):
    # hmac.new expects bytes, so the key and the body are encoded explicitly
    hasher = hmac.new(secret_key.encode("utf-8"), request_body.encode("utf-8"), hashlib.sha256)
    dig = hasher.hexdigest()
    # Constant-time comparison of the two signatures
    return hmac.compare_digest(dig, request_signature)


def generate_response(status_code=201, body=None):
    return dict(
        status_code=status_code,
        headers={"Content-Type": "application/json"},
        body=json.dumps(body if body is not None else {})
    )


def recreate_body_from_request(_request):
    # As header and body are merged in the same variable `_request`
    # We must recreate the body
    body = dict()
    body["job_name"] = _request["job_name"]
    body["job_reference"] = _request["job_reference"]
    body["job_url"] = _request["job_url"]
    body["job_summary"] = _request["job_summary"]
    body["job_address"] = _request["job_address"]
    body["job_latitude"] = _request["job_latitude"]
    body["job_longitude"] = _request["job_longitude"]
    body["job_start"] = _request["job_start"]
    body["job_end"] = _request["job_end"]
    body["job_salary_min"] = _request["job_salary_min"]
    body["job_salary_max"] = _request["job_salary_max"]
    return json.dumps(body)


def workflow(_request, settings):
    # Check the signature
    request_signature = _request.get("request-signature")
    if request_signature is None:
        return generate_response(status_code=400, body=dict(message="`request-signature` not found in the request headers !"))

    recreated_body = recreate_body_from_request(_request)
    if not check_signature(request_signature, settings["SIGNATURE_SECRET_KEY"], recreated_body):
        # If the computed signature does not match the sent signature
        return generate_response(status_code=400, body=dict(message="Request compromised"))

    # Start of our logic
    client = Hrflow(api_secret=settings["API_KEY"], api_user=settings["USER_EMAIL"])

    job_hrflow_obj = {
        "name": _request["job_name"],
        "reference": _request["job_reference"],
        "url": _request["job_url"],
        "summary": _request["job_summary"],
        "location": {
            "text": _request["job_address"],
            "lat": _request["job_latitude"],
            "lng": _request["job_longitude"]
        },
        "sections": [],
        "skills": [],
        "languages": [],
        "tags": [],
        "ranges_date": [{
            "name": "dates",
            "value_min": _request["job_start"],
            "value_max": _request["job_end"]
        }],
        "ranges_float": [{
            "name": "salary",
            "value_min": _request["job_salary_min"],
            "value_max": _request["job_salary_max"],
            "unit": "eur"
        }],
        "metadatas": [],
    }

    client.job.indexing.add_json(board_key=settings["BOARD_KEY"], job_json=job_hrflow_obj)

    return generate_response(body=dict(reference=_request["job_reference"]))
```

Don't forget to set the environment properties! In the environment properties, the following constants must be defined in order to use them with the settings parameter of the workflow function:

- API_KEY
- USER_EMAIL
- BOARD_KEY
- SIGNATURE_SECRET_KEY

Step 4: Testing the Workflow

In this part, we will build a wrapper to launch the workflow on a test environment.

The parameters of the workflow: In order to do this, we need to understand what parameters are taken by the workflow function.

HrFlow.ai Workflows

Your environment

Your test environment must be under Python 3.6.

The allowed packages are:

- hrflow==1.8.7
- requests==2.24.0
- selenium==3.141.0
- twilio==6.46.0
- scipy==1.5.1
- numpy==1.19.0
- mailchimp-transactional==1.0.22

You will find them in My Workflows > YOUR_WORKFLOW_NAME > Function > Function Code > Allowed packages.

The code is very simple:

```python
import uuid
import datetime

request = dict()
request["job_name"] = "Data scientist"
# Generate Unique ID
request["job_reference"] = str(uuid.uuid4())
request["job_url"] = "https://hrflow.ai/careers/"
request["job_summary"] = "Data scientist at hrflow. What else ?!"
request["job_address"] = "Le Belvédère, 1-7 Cr Valmy, 92800 Puteaux"
request["job_latitude"] = 48.8922598
request["job_longitude"] = 2.232953

CURRENT_DATE = datetime.datetime.now()
DELAY = datetime.timedelta(days=42)
request["job_start"] = CURRENT_DATE.isoformat()
request["job_end"] = (CURRENT_DATE + DELAY).isoformat()
request["job_salary_min"] = 4000.0
request["job_salary_max"] = 5000.0

settings = {
    "API_KEY": "YOUR_API_KEY",
    "USER_EMAIL": "YOUR_USER_EMAIL",
    "BOARD_KEY": "YOUR_BOARD_KEY"
}

workflow(request, settings)
```

Storing API KEY: Instead of storing API_KEY in plain text, you can use getpass.getpass("Your API KEY:")

```python
import getpass

settings = {
    "API_KEY": getpass.getpass("Your API KEY:"),
    "USER_EMAIL": "YOUR_USER_EMAIL",
    "BOARD_KEY": "YOUR_BOARD_KEY"
}
```

Step 5: Deploying the Workflow

All you need to do is:

- Paste the code in My Workflows > YOUR_WORKFLOW_NAME > Function > Function Code.

- Define the environment variables in My Workflows > YOUR_WORKFLOW_NAME > Function > Function Code > Environment properties.

- Deploy

For more information

Step 6: Monitoring the Workflow

This feature is coming soon !

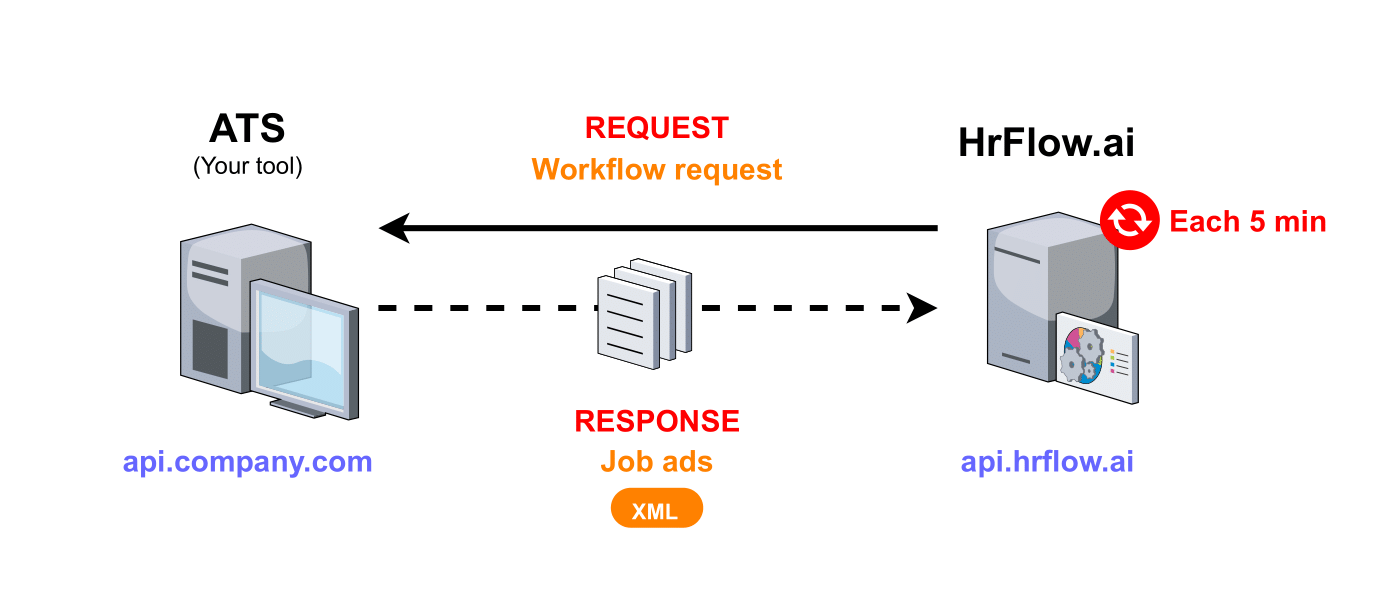

Case Study 2: Saving Job offers from an XML Jobs stream in HrFlow.ai

This diagram illustrates the communication between your company's ATS and HrFlow.ai

Step 1: Choosing the Trigger

To make the case study generic and keep it as simple as possible, we will communicate with a tool created for the demonstration.

We want to synchronize the database of jobs available in the ATS with the HrFlow database (Board).

Let's consider the following endpoint on the ATS side:

| Type | Value |

|---|---|

| URL (GET) | https://api.company.com/jobs |

| HEADER | Nothing |

| BODY | Nothing |

Authentication: In concrete cases, you should specify an authentication token in the URL parameter or an X-API-KEY in the header.
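As an illustration of the header option, the sketch below builds a GET request that carries the key in an X-API-KEY header, using only the standard library. The function name `build_stream_request` and the key value are placeholders, and whether your ATS expects exactly this header name is an assumption to check against its documentation.

```python
import urllib.request

STREAM_URL = "https://api.company.com/jobs"


def build_stream_request(api_key):
    """Build (but do not send) a GET request with the key in an X-API-KEY header."""
    return urllib.request.Request(
        STREAM_URL,
        headers={"X-API-KEY": api_key},
        method="GET",
    )


stream_request = build_stream_request("YOUR_ATS_API_KEY")
# Sending it would be done with: urllib.request.urlopen(stream_request)
```

With the requests package, the equivalent call would pass the same dictionary through the headers argument of requests.get.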

The response returned by this request is an XML stream. Here is an example:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<jobs>
    <job data-id="1">
        <name>Data scientist</name>
        <reference>af42dd</reference>
        <url>https://hrflow.ai/careers/</url>
        <summary>Data scientist at hrflow. What else ?!</summary>
        <address>Le Belvédère, 1-7 Cr Valmy, 92800 Puteaux</address>
        <latitude>48.8922598</latitude>
        <longitude>2.232953</longitude>
        <start>2021-11-12T14:46:56.816369</start>
        <end>2021-12-24T14:46:56.816369</end>
        <salary>
            <min>4000</min>
            <max>5000</max>
        </salary>
    </job>
    <job data-id="2">
        ...
    </job>
    ...
</jobs>
```

The fields for each element are defined in the following table:

| Field name | Description | Type |

|---|---|---|

| name | Job's name | String |

| reference | Job's reference | String |

| url | Application URL | String |

| summary | Job's summary | String |

| address | Job's Address | String |

| latitude | Job's latitude | Float |

| longitude | Job's longitude | Float |

| start | Job start date | String formatted as an ISO 8601 date |

| end | Job end date | String formatted as an ISO 8601 date |

| salary min | Minimum salary | Float |

| salary max | Maximum salary | Float |
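To make the table concrete, here is a small sketch that reads some of these fields from a shortened copy of the sample stream with the standard library's ElementTree. The function name `read_jobs` and the keys of the returned dictionaries are illustrative choices only.

```python
from xml.etree import ElementTree

# Shortened copy of the sample stream shown above.
SAMPLE_STREAM = """<?xml version="1.0" encoding="UTF-8"?>
<jobs>
  <job data-id="1">
    <name>Data scientist</name>
    <reference>af42dd</reference>
    <latitude>48.8922598</latitude>
    <salary><min>4000</min><max>5000</max></salary>
  </job>
</jobs>"""


def read_jobs(xml_text):
    """Return one dict per <job> element, converting numeric fields to numbers."""
    jobs = []
    for job in ElementTree.fromstring(xml_text).findall("job"):
        jobs.append({
            "id": int(job.attrib["data-id"]),
            "name": job.findtext("name"),
            "reference": job.findtext("reference"),
            "latitude": float(job.findtext("latitude")),
            # Nested elements are reached with a path relative to <job>.
            "salary_min": float(job.findtext("salary/min")),
            "salary_max": float(job.findtext("salary/max")),
        })
    return jobs
```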

Step 2: Pulling the XML stream

- Retrieve the stream content

To do this, we will make a request to your ATS and read the returned response:

```python
import requests

STREAM_URL = "https://api.company.com/jobs"

response = requests.get(STREAM_URL)
if response.status_code != 200:
    raise ConnectionError("ATS server does not want to give us the data stream !")
xml_stream_str = response.text
```

- Now you have to parse the XML stream

```python
from xml.etree import ElementTree

jobs_node = ElementTree.fromstring(xml_stream_str)
for job in jobs_node.findall("job"):
    pass  # TODO job.find("name").text
```

Step 3: Building the Automation logic

It's time to code the internal logic of the Workflow.

As a reminder, this workflow is used to add a job in a Board HrFlow.ai (Create, Configure a Board).

First of all, it is necessary to have created a Board.

Board for this example: In the rest of the demonstration, we will use the following board_key: 5bd99cf57594e4f0e8feb038743368676bcdabac.

This key will be stored in the Environment properties of the workflow with the name BOARD_KEY.

Next, you need to be able to connect the fields sent by your ATS and the appropriate fields to create a HrFlow.ai job.

Fields available for a job: You can find the list of available fields for a HrFlow.ai job in the documentation API REFERENCE.

📖 The Job Object

Connector from the ATS fields to those of a HrFlow.ai object

```python
job_hrflow_obj = {
    "name": job.find("name").text,
    "reference": job.find("reference").text,
    "url": job.find("url").text,
    "summary": job.find("summary").text,
    "location": {
        "text": job.find("address").text,
        "lat": float(job.find("latitude").text),
        "lng": float(job.find("longitude").text)
    },
    "sections": [],  # deprecated
    "culture": "",
    "benefits": "",
    "responsibilities": "",
    "requirements": "",
    "interviews": "",
    "skills": [],
    "languages": [],
    "tags": [],
    "ranges_date": [{
        "name": "dates",
        "value_min": job.find("start").text,
        "value_max": job.find("end").text
    }],
    "ranges_float": [{
        "name": "salary",
        "value_min": float(job.find("salary").find("min").text),
        "value_max": float(job.find("salary").find("max").text),
        "unit": "eur"
    }],
    "metadatas": [],
}
```

Workflow

```python
import requests
from xml.etree import ElementTree

from hrflow import Hrflow


def connector_from_xml_to_json(job):
    job_hrflow_obj = {
        "name": job.find("name").text,
        "reference": job.find("reference").text,
        "url": job.find("url").text,
        "summary": job.find("summary").text,
        "location": {
            "text": job.find("address").text,
            "lat": float(job.find("latitude").text),
            "lng": float(job.find("longitude").text)
        },
        "sections": [],  # deprecated
        "culture": "",
        "benefits": "",
        "responsibilities": "",
        "requirements": "",
        "interviews": "",
        "skills": [],
        "languages": [],
        "tags": [],
        "ranges_date": [{
            "name": "dates",
            "value_min": job.find("start").text,
            "value_max": job.find("end").text
        }],
        "ranges_float": [{
            "name": "salary",
            "value_min": float(job.find("salary").find("min").text),
            "value_max": float(job.find("salary").find("max").text),
            "unit": "eur"
        }],
        "metadatas": [],
    }
    return job_hrflow_obj


def workflow(settings):
    client = Hrflow(api_secret=settings["API_KEY"], api_user=settings["USER_EMAIL"])

    # Get XML stream
    response = requests.get(settings["STREAM_URL"])
    if response.status_code != 200:
        raise ConnectionError("ATS server does not want to give us the data stream !")
    xml_stream_str = response.text

    # Parse XML
    jobs_node = ElementTree.fromstring(xml_stream_str)

    # For each job in the stream
    for job in jobs_node.findall("job"):
        # Only add the jobs that are not yet synchronized
        if int(settings["last_job_id"]) < int(job.attrib["data-id"]):
            job_hrflow_obj = connector_from_xml_to_json(job)
            client.job.indexing.add_json(board_key=settings["BOARD_KEY"], job_json=job_hrflow_obj)
            settings["last_job_id"] = job.attrib["data-id"]
```

Add only the jobs that are not yet synchronized

In the workflow, there are two lines to handle this:

```python
if int(settings["last_job_id"]) < int(job.attrib["data-id"]):
    ...
    settings["last_job_id"] = job.attrib["data-id"]
```

A field in the Environment properties always holds the id of the last synchronized job. In our XML stream, we have arbitrarily defined a data-id attribute that increments automatically for each new job.
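The same idea can be sketched as a standalone helper that is easy to test without calling any API. The name `select_new_jobs` is hypothetical. Unlike the two lines in the workflow, this variant keeps the highest id seen, an assumption added here so that a stream that is not sorted by data-id does not cause jobs to be skipped.

```python
from xml.etree import ElementTree


def select_new_jobs(xml_text, last_job_id):
    """Return (new_job_elements, updated_last_job_id).

    A job is new when its data-id is greater than last_job_id.
    """
    new_jobs = []
    highest_id = last_job_id
    for job in ElementTree.fromstring(xml_text).findall("job"):
        job_id = int(job.attrib["data-id"])
        if job_id > last_job_id:
            new_jobs.append(job)
            highest_id = max(highest_id, job_id)
    return new_jobs, highest_id
```

The returned id would then be written back to last_job_id once the new jobs have been added to the Board.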

Another way to do this is to check that the job we want to add is not already on the board. To do this, we send a GET job/indexing request to find out all the jobs on the board, retrieve their reference and compare it with the reference of the job we are trying to add to the board.
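The comparison step of this reference-based alternative can be sketched as below. How the existing references are fetched from the Board is deliberately left out: `existing_references` is assumed to be a list you have already retrieved, and the function name `keep_unknown_jobs` is hypothetical.

```python
def keep_unknown_jobs(candidate_jobs, existing_references):
    """Return the candidate jobs whose reference is not already on the Board."""
    seen = set(existing_references)
    unknown = []
    for job in candidate_jobs:
        if job["reference"] not in seen:
            unknown.append(job)
            # Remember it, so duplicates inside the stream itself are dropped too.
            seen.add(job["reference"])
    return unknown
```

Using a set keeps each membership check cheap, which matters when the Board already holds many jobs.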

Don't forget to set the environment properties! In the environment properties, the following constants must be defined in order to use them with the settings parameter of the workflow function:

- API_KEY
- USER_EMAIL
- BOARD_KEY
- STREAM_URL with https://api.company.com/jobs
- last_job_id with -1. This value will change during the execution of the workflow.

To know more about it: HrFlow.ai Workflows

Step 4: Testing the Workflow

In this part, we will build a wrapper to launch the workflow on a test environment.

The parameters of the workflow: In order to do this, we need to understand what parameters are taken by the workflow function.

HrFlow.ai Workflows

Your environment

Your test environment must be under Python 3.6.

The allowed packages are:

- hrflow==1.8.7
- requests==2.24.0
- selenium==3.141.0
- twilio==6.46.0
- scipy==1.5.1
- numpy==1.19.0
- mailchimp-transactional==1.0.22

You will find them in My Workflows > YOUR_WORKFLOW_NAME > Function > Function Code > Allowed packages.

The code is very simple:

```python
settings = {
    "API_KEY": "YOUR_API_KEY",
    "USER_EMAIL": "YOUR_USER_EMAIL",
    "BOARD_KEY": "YOUR_BOARD_KEY",
    "STREAM_URL": "https://api.company.com/jobs",
    "last_job_id": "-1"
}

workflow(settings)
```

Storing API KEY: Instead of storing API_KEY in plain text, you can use getpass.getpass("Your API KEY:")

```python
import getpass

settings = {
    "API_KEY": getpass.getpass("Your API KEY:"),
    "USER_EMAIL": "YOUR_USER_EMAIL",
    "BOARD_KEY": "YOUR_BOARD_KEY",
    "STREAM_URL": "https://api.company.com/jobs",
    "last_job_id": "-1"
}
```

Step 5: Deploying the Workflow

All you need to do is:

- Paste the code in My Workflows > YOUR_WORKFLOW_NAME > Function > Function Code.

- Define the environment variables in My Workflows > YOUR_WORKFLOW_NAME > Function > Function Code > Environment properties.

- Deploy

For more information

Step 6: Monitoring the Workflow

This feature is coming soon !
